What do you get when you mix a legacy Airflow stack, a mountain of AWS services, and a news dashboard that takes an hour to refresh?

Frustrated engineers, slow feedback loops, and a ton of missed opportunities.

This is how Mediaset, one of Europe’s largest private broadcasters, went from “it works, but don’t touch it” to a modern, Python-native data stack built with Temporal and Bauplan. In just a few weeks, they shipped a near real-time dashboard that refreshes in about five minutes instead of an hour.

The Setup: Video Views, Petabytes, and Plenty of Pain

Mediaset digital by the numbers (2024):

- 24 million registered users (roughly 40% of Italy’s population)

- 10 billion video views (roughly 25 million views per day)

- 76 petabytes of data processed

Their original data stack looked like a greatest-hits collection of AWS services: EMR, Glue, Athena, Redshift, plus Airflow duct-taped on top. Building just one internal dashboard for TGCOM24—their flagship news property—took three months, required a team of senior engineers and consultants, and still had a frustrating one-hour data lag.

Rebuilding from First Principles

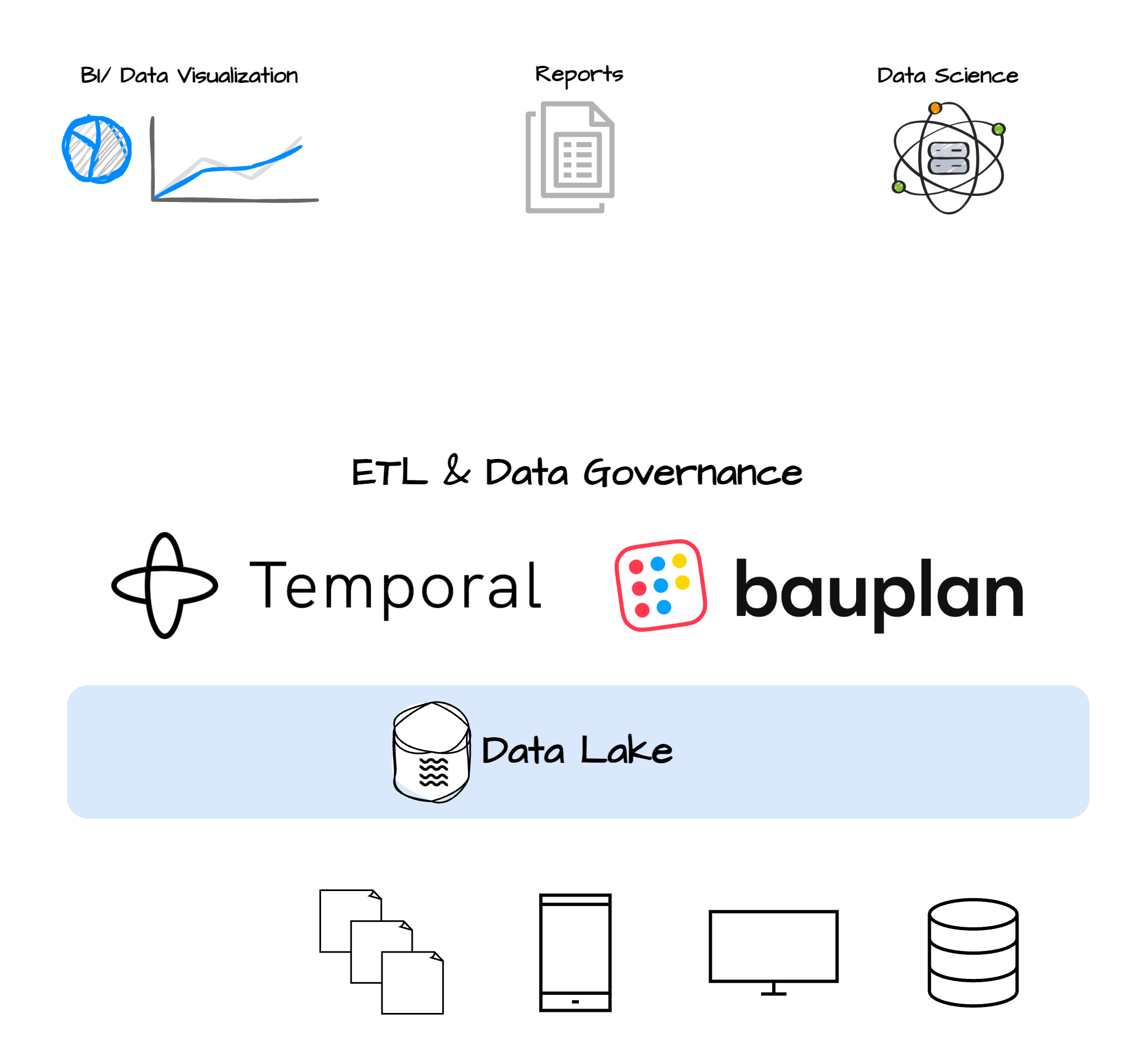

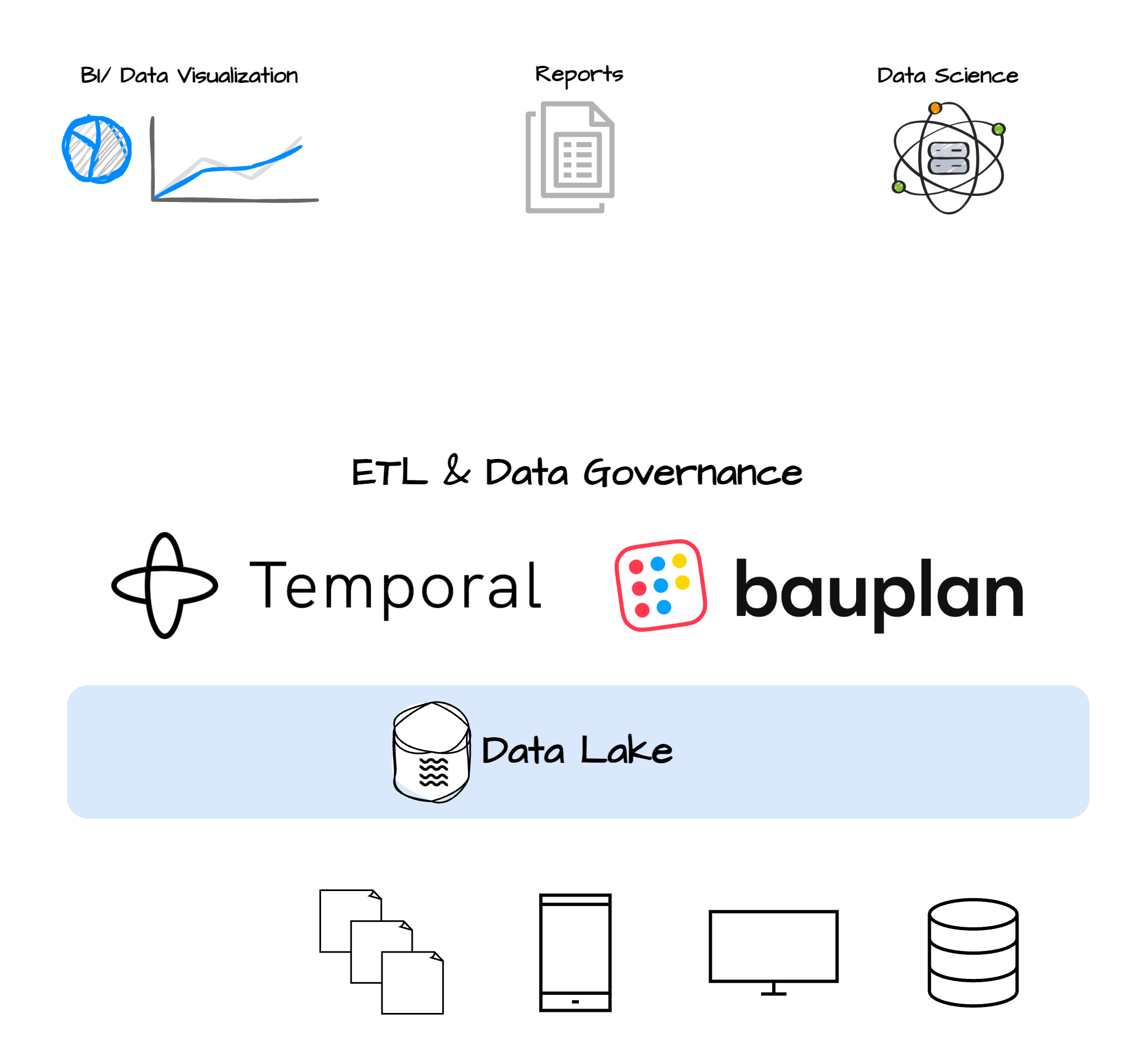

Rather than another temporary fix, the Mediaset team decided to start fresh. Here’s what changed:

- Airflow → Temporal for orchestration

- EMR + Glue + Athena + Redshift → Bauplan serverless platform for data management and pipelines

The goal wasn’t just a tech upgrade. It was to build a simpler, more reliable system that could be developed and maintained by any competent engineering and data science team without needing deep infra expertise.

What Bauplan Does, and Why It Beats the Traditional AWS Stack

Bauplan is a serverless platform specifically built for data and AI workloads. It’s designed for developers who want to ship fast without wrangling infra.

Here’s what it replaces:

- EMR → no more cluster management

- Glue → no more boilerplate job authoring

- Athena/Redshift → no need for a data warehouse

Instead, you write Python functions, chain them together into pipelines, and run them as serverless functions in the cloud. You get nicely typed objects back (boto3, looking at you!):

```python
client.create_branch("my_dev_branch")
client.import_data("s3://my-bucket")
client.run("quality_pipeline")
client.merge("my_dev_branch", "main")
```

Data remains securely in your S3 buckets, stored in open formats like Iceberg. There are no lock-ins, no data copies, and no hidden compute bills. You treat your data like code: versioned, testable, and portable.
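
To make the branch, import, run, merge flow more concrete, here is a minimal in-memory sketch of the idea behind it: write data to an isolated branch, check it, and merge into `main` only if the check passes (a pattern often called write-audit-publish). This is a toy model, not Bauplan's API. `ToyCatalog`, `write_audit_publish`, `no_missing_views`, the `video_views` table, and the sample rows are all hypothetical names invented for illustration.

```python
import copy
from typing import Callable, Dict, List

Row = Dict[str, object]
Table = List[Row]


class ToyCatalog:
    """In-memory stand-in for a versioned data catalog (NOT Bauplan's API)."""

    def __init__(self) -> None:
        # Each branch maps table names to rows; "main" is the published state.
        self.branches: Dict[str, Dict[str, Table]] = {"main": {}}

    def create_branch(self, name: str, from_ref: str = "main") -> None:
        # A branch starts as an isolated copy of the source branch's tables.
        self.branches[name] = copy.deepcopy(self.branches[from_ref])

    def write_table(self, branch: str, table: str, rows: Table) -> None:
        self.branches[branch][table] = list(rows)

    def merge(self, source: str, into: str = "main") -> None:
        # Publish: tables on the source branch overwrite those on the target.
        self.branches[into].update(copy.deepcopy(self.branches[source]))


def no_missing_views(rows: Table) -> bool:
    """Hypothetical quality check: every row must carry a view count."""
    return all(row.get("views") is not None for row in rows)


def write_audit_publish(
    catalog: ToyCatalog,
    branch: str,
    table: str,
    rows: Table,
    check: Callable[[Table], bool],
) -> bool:
    catalog.create_branch(branch)             # write happens off main
    catalog.write_table(branch, table, rows)
    if not check(catalog.branches[branch][table]):
        return False                          # audit failed: main is untouched
    catalog.merge(branch)                     # audit passed: publish to main
    return True


catalog = ToyCatalog()
good = [{"video_id": "a", "views": 10}]
bad = [{"video_id": "b", "views": None}]

published_good = write_audit_publish(catalog, "dev_good", "video_views", good, no_missing_views)
published_bad = write_audit_publish(catalog, "dev_bad", "video_views", bad, no_missing_views)
```

In this sketch the second batch fails its check, so it stays on its own branch and the rows on `main` are unchanged. That isolation is what makes data "testable" in the same sense as code on a feature branch.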

What Temporal Does, and Why It’s Better than Airflow

Temporal is a workflow engine built for reliability. It handles retries and failures and persists workflow state, so progress is not lost when something crashes mid-run. Why Mediaset picked it over Airflow:

- Durable, replayable Workflows with automatic retries

- Everything is code—no YAML, no flaky UI debugging

- Built-in support for scheduling, signals, and complex flows

Airflow is fine when it works. But when it breaks (and it will), debugging DAGs across multiple AWS services becomes a full-time job. Temporal just works.
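
To illustrate what "durable, replayable Workflows with automatic retries" means in practice, here is a toy, standard-library-only sketch. It does not use the Temporal SDK and is far simpler than what Temporal actually does. It only models two ideas: a failing step is retried automatically, and completed step results are recorded in a history so that re-running the workflow replays those results instead of executing the steps again. `ToyDurableRunner`, `refresh_workflow`, and the step functions are hypothetical names.

```python
from typing import Any, Callable, Dict, List, Optional


class ToyDurableRunner:
    """Toy model of durable execution (NOT the Temporal SDK)."""

    def __init__(self, max_attempts: int = 3) -> None:
        # Stands in for a persisted event history of completed steps.
        self.history: Dict[str, Any] = {}
        self.max_attempts = max_attempts

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        if name in self.history:
            # Replay: reuse the recorded result, do not run the step again.
            return self.history[name]
        last_error: Optional[Exception] = None
        for _ in range(self.max_attempts):
            try:
                result = fn()
            except Exception as exc:  # retry on any failure in this toy model
                last_error = exc
                continue
            self.history[name] = result
            return result
        raise RuntimeError(
            f"step {name!r} failed after {self.max_attempts} attempts"
        ) from last_error


calls = {"ingest": 0, "publish": 0}


def flaky_ingest() -> str:
    """Hypothetical step that fails once with a transient error."""
    calls["ingest"] += 1
    if calls["ingest"] < 2:
        raise ConnectionError("transient failure")
    return "ingested"


def publish() -> str:
    calls["publish"] += 1
    return "published"


def refresh_workflow(runner: ToyDurableRunner) -> List[str]:
    return [runner.step("ingest", flaky_ingest), runner.step("publish", publish)]


runner = ToyDurableRunner()
first = refresh_workflow(runner)     # ingest fails once, then succeeds
replayed = refresh_workflow(runner)  # replay: no step is executed again
```

Here the second call returns the same results without touching `flaky_ingest` or `publish`. In a real engine the history lives in a durable store rather than in process memory, which is what lets a workflow resume after a worker crash.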

Real-world Results

After switching to Temporal and Bauplan:

- Data freshness improved from 1 hour → 5 minutes

- Dev cycles shrank from 3 months → 6 weeks

- Infrastructure complexity went from 6+ AWS services → 2 tools

The new stack enabled fast iteration, clean interfaces, and resilient workflows—without requiring a team of platform specialists or months of onboarding.

Why It Works

Temporal manages orchestration, and Bauplan handles the data. Together, they give you a full CI/CD workflow for your pipelines, minus the traditional complexity of big data stacks. You write Python. You commit your changes. You ship.

The dashboard was just the beginning. Mediaset is now rolling out even more ambitious use cases:

- AI pipelines with LLMs for content summarization

- Real-time ad optimization

- A full migration away from legacy tooling

Learn more about the Mediaset journey and try the stack for yourself: watch the talk, try Bauplan for free, and sign up for Temporal Cloud to get $1,000 in free credits.