Simple question: Is Temporal an AI product/technology?

More nuanced answer: Yes! What we built is perfectly suited for AI applications, even if it was originally built for other use cases.

Temporal is built to bring resilience — or as we like to call it, Durable Execution — to distributed systems. For example, it makes all of these things more resilient:

- Your website, powered by dozens or hundreds of microservices.

- Your order processing application that communicates with everything from payment processors to shipping and inventory management systems.

- The system you use to reliably generate credit card statements for your millions of card members.

These, and many others, are the use cases that Temporal was originally built for, well before generative AI burst onto the scene in 2022. Now that the industry has reached the point where AI agents are moving from experimentation into production, it turns out Temporal is the perfect technology for implementing your LLM-powered AI applications and agents.

I've already spoken about the fact that AI applications and agents are distributed systems. I even suggest they are distributed systems on steroids because your app may end up making an order of magnitude more remote requests to fulfill a user experience. Just like the cloud-native applications of the last couple of decades, AI apps need to operate more reliably even though transient failures in the underlying infrastructure are common. I also captured my mental model for agents, which helped set a foundation for what I do in this piece.

But it’s time for more detail. How exactly does Temporal satisfy the needs of this class of applications? As it happens, Temporal has an answer for every one of the key elements of an AI application or agent.

The key elements of a generative AI–powered application

At the most basic level, generative AI–powered applications are those that leverage an LLM to fulfill part of their functionality. The LLM alone does not make up the app. Even ChatGPT (which is an app, not an LLM), simple as it is, invokes an LLM, displays its response to the user, accepts input from that user, adds that input to the running history of the chat, and then invokes the LLM again.

Of course, applications come in many shapes and sizes (it’s not even the case that every UX for a generative AI–powered application includes a chat interface), but at their core, they combine LLMs with actions to deliver some experience.

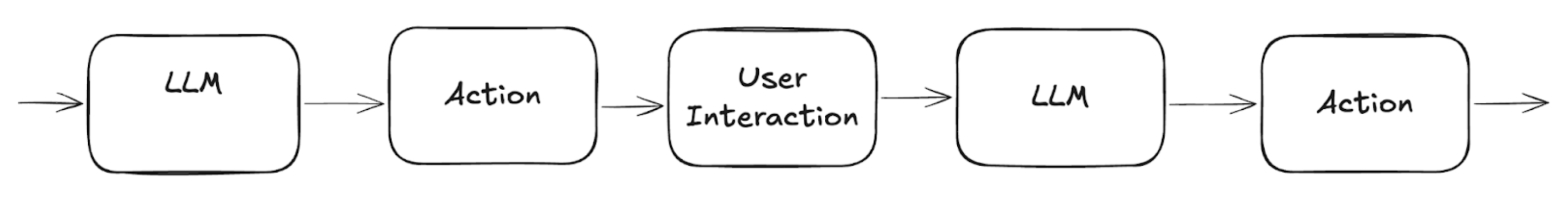

For example, you might use an LLM to process a Slack message sent to your #it-support channel, have it file an appropriate ticket in ServiceNow, wait for human approval, and then provision some infrastructure. That might look something like this:

Chain workflow

where a series of LLM calls, actions, and user interactions are strung together.

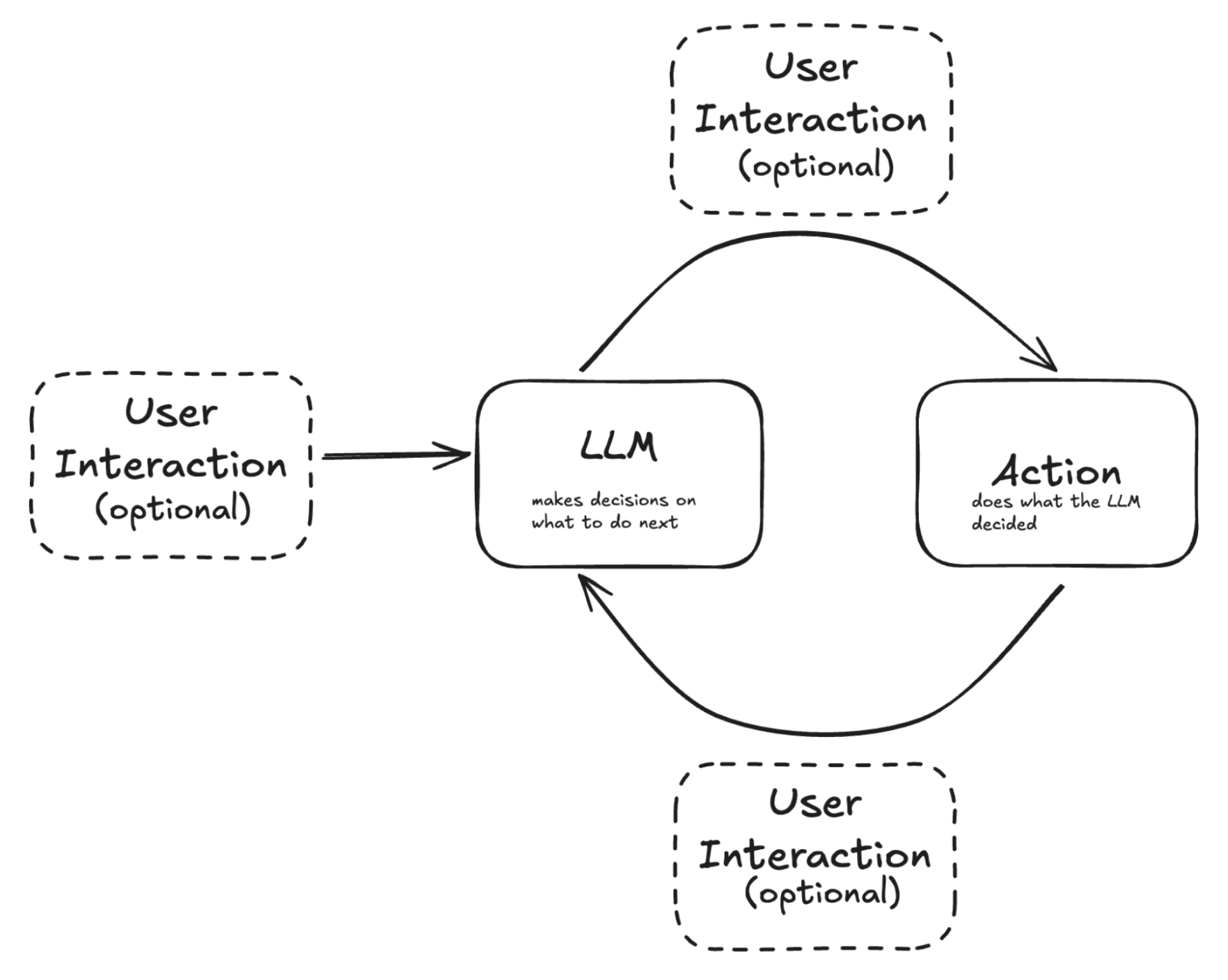

Or it may look something like this:

Loop workflow

where the exact trajectory through the business logic is not known at design time but is determined by the system itself, specifically with the LLM being used to drive the flow.

In both cases, the primary elements of the solution are the same and are well-addressed by Temporal. This table offers an overview — a bit of a cheatsheet. On the left, we’ve bolded the terms most commonly used to describe a key element of AI applications. On the right, we briefly describe how Temporal satisfies the need. Think of this as a description of Temporal in today’s AI lingua franca. In the section that follows, we’ll cover each concept in more detail.

| Key element in AI | How Temporal addresses it |
|---|---|
| We stitch together a bunch of steps into **chains, graphs or agentic loops**. | This is a Temporal Workflow. Workflows are equally well-suited to designs that structure steps at design time (first diagram) as those that are dynamic (second diagram). |
| We use **Large Language Models (LLMs)**. | You invoke these through Temporal Activities, delivering resilience out of the box. |
| We invoke **tools**, craft **prompts**, and access **resources**. We also invoke **MCP servers** via MCP clients. | You invoke these through Temporal Activities, delivering resilience out of the box. When tools are MCP servers, the MCP client is implemented within an Activity. |
| We implement **MCP servers**. | These are implemented as Temporal Workflows and Activities. |
| AI applications, especially AI agents, are responsible for providing **memory**. | You just manage your application state in variables in your Workflow. As an added bonus, with Temporal, that state is durable. |
| We use **checkpointing** to keep from having to rerun steps if a process crashes. | Temporal delivers this implicitly through its event sourcing and state management architecture. You never have to think about checkpointing. |
| We must allow for **humans in the loop**. | This is achieved through Temporal Signals & Updates, and Temporal Queries. |
| AI applications are often **long-running**. | In addition to its event sourcing and state management foundation, Temporal handles long-running processes through its Worker architecture. |

Let’s take a closer look

Now, with this very high-level overview showing the alignment of Temporal with the needs of an AI application or agent, let’s dive deeper into each element. Understanding these building blocks — and how Temporal handles them — will not only deepen your understanding, but I also hope it will help you get started quickly.

- Chain, graph or agentic loop: These are all terms used to describe how the boxes in the diagrams above are composed.

  - Chaining of steps was a term popularized by LangChain through their first offering, one that allowed various steps in an application to be chained together, with outputs from one step flowing as inputs to the next. As depicted in the first diagram, a chain is used to define a predetermined, linear flow.

  - Agentic loop is the term used to describe the cycle shown in the second diagram. In this model, the LLM is used to drive the actions that happen on every cycle, and the LLM is also used to determine when a goal has been reached and the cycle is exited.

  - Clearly, you can model either of these approaches as a graph. Graphs are also sometimes used to model more complex, yet still predetermined, flows: think chains with added branches and loops.

When using Temporal to build your AI application, the predetermined flow (simple or complex) or the agentic loop is implemented as a Temporal Workflow. It’s just regular code, providing the most powerful and familiar model for orchestrating components (LLMs, actions, and UX) into a business application. You can program in your favorite language¹, so there is no new language or DSL to learn. And because it’s a general-purpose programming language, there are no abstractions to get in your way. AI application patterns will, without question, continue to evolve in the coming months and years, and we are confident that these general-purpose programming languages will be as well suited to implementing new patterns as they are to satisfying the current ones.
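To make the two shapes concrete, here is a minimal sketch in plain Python. It deliberately does not use the Temporal SDK, and `fake_llm` and `file_ticket` are hypothetical stand-ins for a real model call and a real ticketing action. In a Temporal application, a function like `chain` or `agentic_loop` would be the body of a Workflow, with the LLM and tool calls routed through Activities.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: picks the next action or says DONE."""
    if "ticket filed" in prompt:
        return "DONE"
    return "file_ticket"


def file_ticket(request: str) -> str:
    """Hypothetical action; a real one would call a ticketing API."""
    return f"ticket filed for: {request}"


def chain(request: str) -> list[str]:
    """Predetermined flow: the order of steps is fixed at design time."""
    summary = f"summary of: {request}"  # step 1 (would be an LLM call)
    ticket = file_ticket(summary)       # step 2 (an action)
    return [summary, ticket]


def agentic_loop(request: str, llm: Callable[[str], str], max_turns: int = 5) -> list[str]:
    """Dynamic flow: the LLM chooses each action and decides when to stop."""
    tools = {"file_ticket": file_ticket}
    history = [request]
    for _ in range(max_turns):
        decision = llm("\n".join(history))
        if decision == "DONE":
            break
        history.append(tools[decision](request))
    return history
```

Both functions are ordinary code: the chain is a straight sequence of calls, and the loop is a `for` with a model-driven exit condition. No DSL is involved in either.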

- Large (or Small) Language Model (LLM/SLM): While the applications we speak of here obviously include the use of an LLM, it is still worth calling it out explicitly, not only for completeness but also because your application is responsible for interfacing with the LLM:

  - The LLMs your application utilizes could be in the cloud, within your own corporate network, or even locally on your machine (these would be the smaller models I hinted at above). In virtually all cases, they are running in processes outside of your application’s main orchestration.

  - While at the high level, all LLMs operate by taking in tokens and outputting tokens, their models differ, and the way that the input tokens are structured can impact their qualitative performance. The application you are writing is responsible for interfacing with the LLM, possibly structuring the input for the best performance.

When using Temporal to build your AI application, the Workflow will interact with an LLM via a Temporal Activity. A Temporal Activity is code (again, written in your favorite language) that implicitly delivers resilience for distributed systems, and it gives you full control over any logic needed around the LLM invocation.

For example, when a network glitch renders the LLM unavailable or when the LLM is rate-limiting requests, the Activity automatically retries those requests until conditions allow for completion. As the developer, you don’t write the retry logic; that behavior is handled by Temporal. And because Temporal is optimized for distributed systems, retries (and other resilience patterns) are designed to address a wide range of failure scenarios.
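For a sense of what you are spared, here is a rough sketch of the retry-with-backoff loop you might otherwise write by hand around an LLM call. It is plain Python, not Temporal code. `TransientError`, `call_with_retries`, and `make_flaky_llm` are hypothetical names, and the attempt count and delays are illustrative values, not Temporal defaults.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(fn, *, max_attempts=5, initial_delay=0.01, backoff=2.0, sleep=time.sleep):
    """Call fn(), retrying on TransientError with exponentially growing delays."""
    delay = initial_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            sleep(delay)
            delay *= backoff


def make_flaky_llm(failures):
    """Build a fake LLM call that fails `failures` times, then succeeds."""
    state = {"calls": 0}

    def llm():
        state["calls"] += 1
        if state["calls"] <= failures:
            raise TransientError("rate limited")
        return "hello from the model"

    return llm, state
```

With an Activity, this loop disappears from your code; you declare the call and the platform handles the retrying.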

And because an Activity effectively brokers the LLM invocation, you can structure it as a gateway of sorts, adding logic that, for example, redacts any Personally Identifying Information (PII) before sending it on to a third-party LLM.
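As an illustration of that gateway idea, the sketch below redacts email addresses and US-style phone numbers before the prompt reaches the model. The patterns are deliberately simplistic assumptions (real PII detection needs far more care), and `redact_pii` and `call_llm_via_gateway` are hypothetical names. In a Temporal application, the body of `call_llm_via_gateway` is the kind of logic that would live inside the Activity.

```python
import re

# Simplistic, illustrative patterns; not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace emails and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


def call_llm_via_gateway(prompt: str, llm) -> str:
    """Redact the prompt, then forward it to the (third-party) LLM callable."""
    return llm(redact_pii(prompt))
```

Because every LLM request passes through one function, the redaction policy has a single place to live and to be tested.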

- Actions or Tools: This term represents the actions that the AI application or agent will take, sometimes at the direction of the LLM and sometimes as a step in a predetermined flow. Very often, this will result in the invocation of a downstream API or the access of external data stores. Tool was the first term popularized, albeit with a rather vague definition, but the emergence of the Model Context Protocol (MCP) has brought more specificity. MCP defines protocols for interfacing with the following types of entities:

  - Tool: Invoking an API, calling a function, or otherwise interfacing with outside systems.

  - Data Source or Resource: Read/write access to data, either locally or in a remote location.

  - Prompt: Prompt templates that are used to provide direction to the LLM in the next invocation.

  - MCP servers: These are services that implement the functionality of a tool or access to a resource.

When using Temporal to build your AI application, tools and resources are accessed via a Temporal Activity. The Workflow, which orchestrates your application by calling LLMs, invoking tools, and allowing for user interaction, runs within a single process and is therefore not subject to the challenges that arise when calls are made to external systems. The external calls themselves go through Temporal Activities, which are explicitly designed to make them resilient.

Just as with Workflows, you build your Activity as code, in this case code that invokes the downstream API or accesses external data sources. Temporal Activities implement a host of durability features so that when, for example, a network outage temporarily renders an API inaccessible, the Activity will automatically retry it without the developer having to handle the case explicitly.

Activities may be written for specific downstream APIs: for example, you may create a FetchWeather activity that makes a REST call to the national weather service. Alternatively, Temporal supports dynamic Activities where the details of the invocation are supplied as arguments to the Activity. This latter approach is particularly useful when your AI agent is built in the form of the second diagram above. If your Workflow calls an MCP server, the Activity is the place where the MCP client is implemented.
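The difference between a purpose-built call and a dynamic one can be sketched in plain Python. This is only an analogy for the idea, not the Temporal dynamic Activity API: `fetch_weather` mirrors the FetchWeather example above (with the REST call stubbed out), while `lookup_order`, `TOOLS`, and `dynamic_tool_call` are hypothetical names.

```python
import json


def fetch_weather(city: str) -> str:
    """Specific tool; a real version would make a REST call to a weather service."""
    return f"Sunny in {city}"


def lookup_order(order_id: str) -> str:
    """Hypothetical second tool, stubbed for illustration."""
    return f"Order {order_id} has shipped"


TOOLS = {"fetch_weather": fetch_weather, "lookup_order": lookup_order}


def dynamic_tool_call(tool_name: str, arguments_json: str) -> str:
    """Generic entry point: the tool name and arguments arrive as data,
    for example from an LLM's tool-call response."""
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](**json.loads(arguments_json))
```

In an agentic loop, the LLM decides at runtime which tool to use, so a single generic entry point like this keeps the loop itself unchanged as tools are added.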

Finally, MCP servers themselves implement logic that also very often includes calls to external systems. Temporal Workflows and Activities are an ideal implementation for MCP servers.

- Memory: LLMs are forgetful — that is, they are stateless. Each time they are invoked, they receive entirely fresh input as a sequence of tokens and generate entirely new output. It is the job of the AI application to remember; to manage the state of the application by keeping track of inputs to and outputs from previous steps, and any relevant resources and prompts, so that it may properly assemble them for every LLM invocation.

When using Temporal to build your AI application, you simply use variables in your Workflow code to store the needed state. You have full control over what data to store, how to store it, and how it is assembled for input to the LLM. This works for flows that are fully specified at design time, as well as for the agentic loops that are driven by the LLM at runtime. There is nothing new to learn, and the approach is sure to work for the yet-to-be-invented, popular AI application patterns.
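Here is a small sketch of memory as plain variables. It is ordinary Python rather than Temporal SDK code, and `ChatSession` and `counting_llm` are hypothetical names. The point is that the running history is just a list the application owns and passes to the model on every call.

```python
def counting_llm(messages):
    """Hypothetical stateless model: it only knows what it is handed."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I have seen {user_turns} user message(s)"


class ChatSession:
    """Holds conversation state in an ordinary attribute."""

    def __init__(self, system_prompt, llm):
        self.messages = [{"role": "system", "content": system_prompt}]
        self.llm = llm

    def ask(self, user_input):
        # The application, not the model, remembers prior turns.
        self.messages.append({"role": "user", "content": user_input})
        reply = self.llm(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

In a Temporal Workflow, an attribute like `messages` would play the same role, with the difference described above that the state is durable.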

- Checkpointing: AI applications beyond the simplest of demos make many calls to LLMs and tools, and they are increasingly leveraging other agents. In the event that the application stops before completion (whether from foreseen or unforeseen causes), checkpointing keeps the application from having to start from the beginning and rerun previous steps in the workflow.

While Temporal already measures up well against the key elements we’ve covered so far, when it comes to checkpointing, Temporal really shines! (And wait until you see what’s in store in the long-running section below.)

You see, when Temporal executes a Workflow, it records a full Event History: every single time code in the Workflow is run, every single time an Activity is called or returns, and more. Plus, it records the values returned by every single Activity call, which means the memory we just spoke about is completely visible and debuggable through Temporal tooling. The outcome that checkpointing delivers in other frameworks is realized through the event sourcing and state management features that form the foundation of Temporal. As the developer, you are not responsible for implementing any part of this protocol. By structuring your apps as Temporal Workflows and Activities, you get this behavior for free.

If the application instance were to shut down (because it crashed, was proactively cycled, or was stopped so you could fix a bug while the app was running), then as soon as the application starts again, the state will be recreated and processing will pick up where it left off. When building Temporal applications, you never have to think about checkpoints. Your mental model is simply that if the application goes down, it will pick up where it left off when it is brought back up. We call this Durable Execution.
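To build intuition for how recorded results make this possible, here is a toy model of event-sourced replay in plain Python. It is a greatly simplified assumption of the idea, not how Temporal is implemented, and `DurableRunner`, `expensive_llm_call`, and `workflow` are hypothetical names. Each step's result is recorded. After a simulated crash, the same code runs again from the top, but completed steps return their recorded results instead of running again.

```python
class DurableRunner:
    """Toy executor: records each step's result and replays it after a restart."""

    def __init__(self, history=None):
        self.history = list(history or [])  # recorded results, in call order
        self._cursor = 0

    def step(self, fn, *args):
        if self._cursor < len(self.history):
            result = self.history[self._cursor]  # replay: reuse recorded result
        else:
            result = fn(*args)                   # first run: execute and record
            self.history.append(result)
        self._cursor += 1
        return result


calls = []  # tracks how often the "expensive" call really runs


def expensive_llm_call(prompt):
    calls.append(prompt)
    return f"answer to {prompt}"


def workflow(runner, crash_after_first=False):
    a = runner.step(expensive_llm_call, "q1")
    if crash_after_first:
        raise RuntimeError("simulated crash")
    b = runner.step(expensive_llm_call, "q2")
    return [a, b]
```

Running `workflow` once with `crash_after_first=True`, then again on a new `DurableRunner` seeded with the first runner's `history`, completes the work while calling `expensive_llm_call` only once per prompt.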

- Human-in-the-loop: While some AI applications may operate entirely autonomously, many (if not most) will have humans that interact with them. They may provide input on launch and at various points throughout the execution, including within an agentic loop.

When using Temporal to build your AI application, you use Signals and Updates to supply input and Queries to extract state to show the user. These are primary abstractions in the Temporal programming model, and just as with Workflows and Activities, you implement these in your programming language of choice. (Insert usual refrain on general-purpose programming languages giving you full flexibility to implement any logic you require. 😀) The Temporal SDK delivers the protocols that invoke these methods, and you program against them idiomatically in each of the languages Temporal supports.
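The interaction pattern can be sketched with nothing but `asyncio`. This is an analogy, not Temporal SDK code: `ApprovalWorkflow` is a hypothetical class in which `approve` plays the role of a Signal (input pushed in from outside) and `get_status` plays the role of a Query (state read without changing it).

```python
import asyncio


class ApprovalWorkflow:
    """Toy flow that pauses until a human approves or rejects."""

    def __init__(self):
        self.status = "waiting for approval"
        self._decision = asyncio.Event()
        self._approved = False

    def approve(self, approved: bool) -> None:
        """Signal analog: deliver the human's decision."""
        self._approved = approved
        self._decision.set()

    def get_status(self) -> str:
        """Query analog: read current state without modifying it."""
        return self.status

    async def run(self) -> str:
        await self._decision.wait()  # block here until a human responds
        self.status = "provisioning" if self._approved else "rejected"
        return self.status


async def demo():
    wf = ApprovalWorkflow()
    task = asyncio.create_task(wf.run())
    await asyncio.sleep(0)       # let the flow reach its wait point
    before = wf.get_status()
    wf.approve(True)
    after = await task
    return before, after
```

What this toy cannot do is survive a process restart while waiting. That durability is what Temporal adds for a wait that may last hours or days.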

- Long-running: Long-running apps are not unique to those leveraging LLMs, yet because AI is allowing more and more functionality to be handled autonomously, the amount of work done in one application session is only increasing. That is, long-running applications are becoming ever more commonplace. Checkpointing is certainly part of delivering a good user experience over timelines that may last hours, days, weeks, or even months and years. But beyond maintaining stability for long periods, applications also need to make efficient use of computing resources.

We already covered how Temporal delivers on checkpointing, so let’s turn to the question of efficient resource utilization. When using Temporal to build your long-running AI application, efficient resource utilization is delivered through the Worker architecture that is at the core of Temporal.

A Temporal Worker is the process within which your Workflow and Activity code runs. It looks after many concurrently running Workflows, and — key to the questions around long-running Workflows — it manages which are currently in active memory and which are not active but in cache. For those that have been evicted from the cache, it replays the Event History to reconstitute the application state for further processing (see checkpointing explanation above). So solid is the implementation that Workers can be tuned to efficiently look after hundreds or thousands of Workflows at once. Workers may also be sized and tuned for particular workload types.
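The cache-and-replay behavior can be modeled in a few lines. This is a toy under stated assumptions, not Temporal's Worker implementation: `ToyWorker` is a hypothetical class, least-recently-used eviction is my choice for illustration, and summing a list of numbers stands in for replaying an Event History to rebuild state.

```python
from collections import OrderedDict


class ToyWorker:
    """Keeps a bounded cache of workflow state; rebuilds evicted state by replay."""

    def __init__(self, cache_size, histories):
        self.cache_size = cache_size
        self.histories = histories  # workflow_id -> list of recorded events
        self.cache = OrderedDict()  # workflow_id -> reconstructed state
        self.replays = 0

    def _replay(self, workflow_id):
        """Rebuild state by folding over the recorded events."""
        self.replays += 1
        return sum(self.histories[workflow_id])

    def get_state(self, workflow_id):
        if workflow_id in self.cache:
            self.cache.move_to_end(workflow_id)  # mark as recently used
            return self.cache[workflow_id]
        state = self._replay(workflow_id)
        self.cache[workflow_id] = state
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)       # evict least recently used
        return state
```

The caller of `get_state` never sees whether the state came from the cache or from replay, which is the property that lets you write Workflows as if they were always in memory.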

As a developer, you can simply write Workflows as if they stayed active in memory at all times. Gone are the days when you had to fragment your business logic into separate pieces purely because of temporal constraints (pun definitely intended! 😁)

Building for what’s next

I didn’t set out to write a blog about distributed systems patterns, but here we are. The thing is, once you start building real AI applications and agents, you quickly realize you’re not just wrangling LLMs — you’re orchestrating complex, multi-step, failure-prone distributed systems, PLUS you’ve got humans engaged throughout the workings of that complex system.

These distributed systems are powering the experiences you are delivering to your users, and the fact that they are delivered through a totally new paradigm (AI) doesn’t lessen the users’ expectation that they just work.

The AI landscape is moving fast, and new patterns are emerging every month. But here’s my bet: whatever comes next — whether it’s multi-agent orchestration, hybrid human-AI workflows, or something we haven’t even thought of yet — it’s going to need rock-solid distributed systems underneath. And that’s exactly what Temporal gives you: real code, in real programming languages, handling real complexity.

As AI applications continue to evolve, the need for resilient, scalable execution becomes even more critical. Curious to see how Temporal can streamline your generative AI workflows in production? Sign up for Temporal Cloud today and get $1,000 in free credits to start building with confidence.

Footnotes

1. Python, Java, Go, TypeScript, .NET, Ruby, PHP